4000-1200 B.C.

Inhabitants of the first known civilization in Sumer keep records of commercial transactions on clay tablets.

Ancient era

The abacus (plural abaci or abacuses), also called a counting frame, is a calculating tool which has been used since ancient times.

The abacus was used early on for arithmetic tasks. What we now call the Roman abacus was used in Babylonia as early as 2400 BC.

The earliest known written documentation of the Chinese abacus dates to the 2nd century BC.

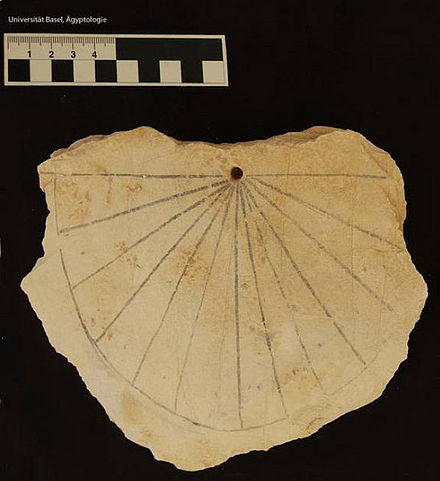

Sundials

The earliest sundials known from the archaeological record are shadow clocks (c. 1500 BC) from ancient Egyptian astronomy and Babylonian astronomy.

World's oldest sundial, from Egypt's Valley of the Kings (c. 1500 BC)

South-pointing chariot, 1050-771 BC

The south-pointing chariot (or carriage) was an ancient Chinese two-wheeled vehicle that carried a movable pointer to indicate the south, no matter how the chariot turned.

Exhibit in the Science Museum in London, England. This conjectural model chariot incorporates a differential gear.

An image of a south-pointing chariot from Sancai Tuhui (first published 1609)

Antikythera mechanism

The Antikythera mechanism (/ˌæntɪkɪˈθɪərə/, /ˌæntɪˈkɪθərə/) is an ancient Greek analogue computer and orrery used to predict astronomical positions and eclipses for calendar and astrological purposes decades in advance.

It could also be used to track the four-year cycle of athletic games which was similar to an Olympiad, the cycle of the ancient Olympic Games.

The instrument is believed to have been designed and constructed by Greek scientists and has been variously dated to about 87 BC, or between 150 and 100 BC, or to 205 BC, or to within a generation before the shipwreck, which has been dated to approximately 70-60 BC.

New calculating tools

Scottish mathematician and physicist John Napier discovered that the multiplication and division of numbers could be performed by the addition and subtraction, respectively, of the logarithms of those numbers.
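Napier's observation rests on the identities log(ab) = log a + log b and log(a/b) = log a - log b; a slide rule performs the same addition mechanically by sliding two logarithmic scales against each other. The Python sketch below illustrates the idea, assuming base-10 logarithms and positive inputs. The function names are hypothetical, and floating-point rounding stands in for the limited precision of printed log tables.

```python
import math


def multiply_via_logs(a: float, b: float) -> float:
    """Multiply two positive numbers by adding their base-10 logarithms."""
    # log10(a * b) == log10(a) + log10(b): add the logs, then take the antilog.
    return 10 ** (math.log10(a) + math.log10(b))


def divide_via_logs(a: float, b: float) -> float:
    """Divide two positive numbers by subtracting their base-10 logarithms."""
    # log10(a / b) == log10(a) - log10(b)
    return 10 ** (math.log10(a) - math.log10(b))


print(round(multiply_via_logs(37.0, 54.0), 6))
print(round(divide_via_logs(1998.0, 54.0), 6))
```

Multiplying 37 by 54 this way gives 1998 only up to floating-point rounding, which is why the results are rounded before printing.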

Slide rule

The slide rule is a mechanical analog computer.

The slide rule was invented around 1620–1630, shortly after John Napier's publication of the concept of the logarithm.

Mechanical calculators

In 1642, while still a teenager, Blaise Pascal (19 June 1623 [Ming dynasty, 3rd year of Tianqi] – 19 August 1662 [Qing dynasty, 1st year of Kangxi]; aged 39) began pioneering work on calculating machines. After three years of effort and 50 prototypes, he invented a mechanical calculator, also known as the arithmetic machine or Pascaline.

Step Reckoner, built by Gottfried Leibniz in 1694

Notable among the calculating machines that followed was the Step Reckoner, built by German polymath Gottfried Leibniz in 1694. Leibniz said “... it is beneath the dignity of excellent men to waste their time in calculation when any peasant could do the work just as accurately with the aid of a machine.”

The Step Reckoner could carry out calculations automatically and was the first machine that could perform all four basic arithmetic operations: addition, subtraction, multiplication, and division.

Punched card data processing

In 1801, Joseph-Marie Jacquard (7 July 1752 [Qing dynasty, 17th year of Qianlong] – 7 August 1834 [Qing dynasty, 14th year of Daoguang]) developed a loom in which the pattern being woven was controlled by punched cards.

https://en.wikipedia.org/wiki/Jacquard_loom

This was a landmark achievement in programmability.

Calculators

By the 20th century, earlier mechanical calculators, cash registers, accounting machines, and so on were redesigned to use electric motors, with gear position as the representation for the state of a variable.

The world's first all-electronic desktop calculator was the British Bell Punch ANITA, released in 1961.

First general-purpose computing device

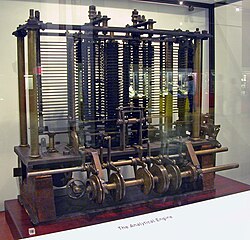

Charles Babbage (26 December 1791 [Qing dynasty, 56th year of Qianlong] – 18 October 1871 [Qing dynasty, 10th year of Tongzhi]), an English mechanical engineer and polymath, originated the concept of a programmable computer. He is considered the "father of the computer".

The Analytical Engine was a proposed mechanical general-purpose computer designed by Charles Babbage. The Analytical Engine incorporated an arithmetic logic unit, control flow in the form of conditional branching and loops, and integrated memory, making it the first design for a general-purpose computer that could be described in modern terms as Turing-complete. In other words, the logical structure of the Analytical Engine was essentially the same as that which has dominated computer design in the electronic era.

Analog computers

In the first half of the 20th century, analog computers were considered by many to be the future of computing.

An important advance in analog computing was the development of the first fire-control systems for long-range ship gunlaying.

Advent of the modern computer

The principle of the modern computer was first described by computer scientist Alan Turing, who set out the idea in his seminal 1936 paper, On Computable Numbers. He also introduced the notion of a 'Universal Machine' (now known as a Universal Turing machine), with the idea that such a machine could perform the tasks of any other machine. Von Neumann acknowledged that the central concept of the modern computer was due to this paper.
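To make the idea of a Turing machine concrete, here is a minimal Python sketch of a single-tape machine driven by a transition table. It is an ordinary (not universal) machine, and the binary-increment rule table, the state names and the blank symbol `_` are illustrative assumptions rather than anything taken from Turing's paper.

```python
from typing import Dict, Tuple

# A transition maps (state, symbol read) -> (symbol to write, head move, next state).
Transitions = Dict[Tuple[str, str], Tuple[str, int, str]]


def run_turing_machine(tape: str, rules: Transitions, state: str, head: int,
                       halt: str = "halt", blank: str = "_",
                       max_steps: int = 10_000) -> str:
    """Simulate a single-tape Turing machine and return the final tape contents."""
    cells = dict(enumerate(tape))  # sparse tape: position -> symbol
    steps = 0
    while state != halt:
        if steps >= max_steps:
            raise RuntimeError("machine did not halt within max_steps")
        symbol = cells.get(head, blank)
        write, move, state = rules[(state, symbol)]
        cells[head] = write
        head += move
        steps += 1
    lo, hi = min(cells), max(cells)
    return "".join(cells.get(i, blank) for i in range(lo, hi + 1)).strip(blank)


# Hypothetical example machine: increment a binary number.
# The head starts on the rightmost digit and propagates the carry leftwards.
INCREMENT: Transitions = {
    ("carry", "1"): ("0", -1, "carry"),  # 1 + carry = 0, keep carrying left
    ("carry", "0"): ("1", 0, "halt"),    # 0 + carry = 1, done
    ("carry", "_"): ("1", 0, "halt"),    # ran off the left end: new leading 1
}

print(run_turing_machine("1011", INCREMENT, state="carry", head=3))  # 1100
```

A universal machine is the same kind of loop, except that its tape also encodes the rule table of the machine being simulated.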

Electromechanical computers

The era of modern computing began with a flurry of development before and during World War II. Most digital computers built in this period were electromechanical: electric switches drove mechanical relays to perform the calculation.

In 1939, the electromechanical bombes were built by British cryptologists to help decipher German Enigma-machine-encrypted secret messages during World War II.

Zuse's Z3

In 1941, German engineer Konrad Zuse followed up his earlier machine with the Z3, the world's first working electromechanical, programmable, fully automatic digital computer. The Z3 was built with 2,000 relays, implementing a 22-bit word length that operated at a clock frequency of about 5–10 Hz. Program code and data were stored on punched film. It was quite similar to modern machines in some respects, pioneering numerous advances such as floating-point numbers. Replacing the hard-to-implement decimal system (used in Charles Babbage's earlier design) with the simpler binary system meant that Zuse's machines were easier to build and potentially more reliable, given the technologies available at that time. The Z3 has since been shown to be Turing-complete in principle.

Digital computation

The mathematical basis of digital computing was established by the British mathematician George Boole, in his work The Laws of Thought, published in 1854.

In the 1930s, working independently, American electronic engineer Claude Shannon and Soviet logician Victor Shestakov both showed a one-to-one correspondence between the concepts of Boolean logic and certain electrical circuits, now called logic gates, which are ubiquitous in digital computers.
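Shannon's correspondence means that binary arithmetic can be built entirely from logic gates. As an illustration (not a description of any specific historical circuit), the Python sketch below models AND, OR and XOR gates as functions on the bits 0 and 1, combines them into a full adder, and chains full adders into a ripple-carry adder. The function names and the 8-bit default width are assumptions.

```python
from typing import Tuple


def and_gate(a: int, b: int) -> int:
    return a & b


def or_gate(a: int, b: int) -> int:
    return a | b


def xor_gate(a: int, b: int) -> int:
    return a ^ b


def full_adder(a: int, b: int, carry_in: int) -> Tuple[int, int]:
    """Add three bits; return (sum bit, carry-out bit)."""
    partial = xor_gate(a, b)
    sum_bit = xor_gate(partial, carry_in)
    # Carry out if both inputs are 1, or if exactly one is 1 and a carry comes in.
    carry_out = or_gate(and_gate(a, b), and_gate(partial, carry_in))
    return sum_bit, carry_out


def ripple_carry_add(x: int, y: int, width: int = 8) -> int:
    """Add two non-negative integers that fit in `width` bits, one full adder per bit."""
    result, carry = 0, 0
    for i in range(width):
        a, b = (x >> i) & 1, (y >> i) & 1  # extract bit i of each operand
        sum_bit, carry = full_adder(a, b, carry)
        result |= sum_bit << i
    return result | (carry << width)  # the final carry becomes the top bit


print(ripple_carry_add(0b1011, 0b0110))  # 17
```

Each bit position waits for the carry from the one below it, which is why this simple design is called "ripple" carry.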

Electronic data processing

Purely electronic circuit elements soon replaced their mechanical and electromechanical equivalents, at the same time that digital calculation replaced analog.

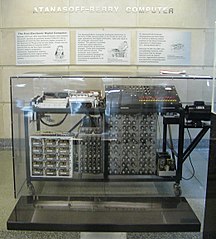

Machines such as the Z3, the Atanasoff–Berry Computer, the Colossus computers, and the ENIAC were built by hand, using circuits containing relays or valves (vacuum tubes), and often used punched cards or punched paper tape for input and as the main (non-volatile) storage medium.

Atanasoff–Berry Computer

The Atanasoff–Berry Computer (ABC) of 1942 was the first electronic digital calculating device. It was all-electronic, used about 300 vacuum tubes, and had a mechanically rotating drum for memory.

The machine's special-purpose nature and lack of a changeable, stored program distinguish it from modern computers.

The machine was, however, the first to implement three critical ideas that are still part of every modern computer:

- Using binary digits to represent all numbers and data

- Performing all calculations using electronics rather than wheels, ratchets, or mechanical switches

- Organizing a system in which computation and memory are separated.

Telephone exchange network

In the 1930s, the engineer Tommy Flowers, working at the telecommunications branch of the General Post Office, began to explore the possible use of electronics for the telephone exchange.

Experimental equipment that he built in 1934 went into operation 5 years later, converting a portion of the telephone exchange network into an electronic data processing system, using thousands of vacuum tubes.

The electronic programmable computer

Tommy Flowers spent eleven months from early February 1943 designing and building the first Colossus.

Colossus was the world's first electronic digital programmable computer, but not Turing-complete. Colossus included the first ever use of shift registers and systolic arrays, enabling five simultaneous tests.

ENIAC (Electronic Numerical Integrator and Computer)

ENIAC was the first electronic programmable computer built in the US. Although it was similar to the Colossus, it was much faster and more flexible. It was unambiguously a Turing-complete device and could compute any problem that would fit into its memory. As on the Colossus, a "program" on the ENIAC was defined by the states of its patch cables and switches, a far cry from the stored-program electronic machines that came later. Once a program was written, it had to be set into the machine mechanically by manually resetting plugs and switches.

The stored-program computer

Early computing machines had fixed programs.

For example, a desk calculator is a fixed-program computer.

Changing the program of a fixed-program machine requires re-wiring, re-structuring, or re-designing the machine.

A stored-program computer includes by design an instruction set and can store in memory a set of instructions (a program) that details the computation.
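The key difference from a fixed-program machine is that instructions are just numbers held in the same memory as data. The toy Python interpreter below sketches that idea under loudly stated assumptions: the single-accumulator design, the opcodes (1 = LOAD, 2 = ADD, 3 = STORE, 9 = HALT) and the encoding `opcode * 100 + address` are all hypothetical, not the instruction set of any historical machine.

```python
from typing import List

# Hypothetical opcodes for this toy machine.
LOAD, ADD, STORE, HALT = 1, 2, 3, 9


def run(memory: List[int]) -> List[int]:
    """Execute a program stored in the same memory as its data."""
    acc = 0  # accumulator register
    pc = 0   # program counter: address of the next instruction
    while True:
        opcode, address = divmod(memory[pc], 100)  # fetch and decode
        pc += 1
        if opcode == LOAD:
            acc = memory[address]
        elif opcode == ADD:
            acc += memory[address]
        elif opcode == STORE:
            memory[address] = acc
        elif opcode == HALT:
            return memory
        else:
            raise ValueError(f"unknown opcode {opcode} at address {pc - 1}")


# Addresses 0-3 hold instructions, 4-6 hold data; both are plain integers.
program = [104, 205, 306, 900, 20, 22, 0]
print(run(program)[6])  # 42
```

Changing what this machine computes means writing different numbers into `memory`, not rewiring anything.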

Stored-Program Theory

The theoretical basis for the stored-program computer had been laid by Alan Turing in his 1936 paper.

John von Neumann circulated his First Draft of a Report on the EDVAC in 1945. Although substantially similar to Turing's design and containing comparatively little engineering detail, the computer architecture it outlined became known as the "von Neumann architecture".

Von Neumann architecture

Manchester "baby"

The Manchester Small-Scale Experimental Machine, nicknamed Baby, the world's first stored-program computer, ran its first program on 21 June 1948.

The machine was not intended to be a practical computer but was instead designed as a testbed for the Williams tube, the first random-access digital storage device.

Manchester Mark 1

Manchester Mark 1 was operational by April 1949; a program written to search for Mersenne primes ran error-free for nine hours on the night of 16/17 June 1949.

The machine's successful operation was widely reported in the British press, which used the phrase "electronic brain" in describing it to their readers.

Commercial computers

The first commercial computer was the Ferranti Mark 1, built by Ferranti and delivered to the University of Manchester in February 1951. It was based on the Manchester Mark 1.

The main improvements over the Manchester Mark 1 were in the size of the primary storage (using random access Williams tubes), secondary storage (using a magnetic drum), a faster multiplier, and additional instructions.

IBM 650 - 900 kg

In 1954, IBM introduced the IBM 650, a smaller, more affordable computer that proved very popular.

It cost US$500,000 ($4.39 million as of 2014) or could be leased for US$3,500 a month ($30 thousand as of 2014).

Microprogramming

In 1951, British scientist Maurice Wilkes developed the concept of microprogramming from the realisation that the Central Processing Unit of a computer could be controlled by a miniature, highly specialised computer program in high-speed ROM.

Microprogramming allows the base instruction set to be defined or extended by built-in programs (now called firmware or microcode).
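Wilkes's idea can be sketched as a two-level interpreter: each machine instruction is looked up in a control store and expanded into a short sequence of micro-operations on the datapath. The Python below is a hypothetical illustration; the registers `A` and `T`, the micro-operation names and the instructions `CLR`, `INC` and `DBL` are invented for the example, and a Python dict stands in for the control-store ROM.

```python
from typing import Callable, Dict, List

Registers = Dict[str, int]

# Micro-operations: the only steps this hypothetical datapath can perform directly.
MICRO_OPS: Dict[str, Callable[[Registers], None]] = {
    "clear_a": lambda r: r.update(A=0),
    "inc_a": lambda r: r.update(A=r["A"] + 1),
    "copy_a_to_t": lambda r: r.update(T=r["A"]),
    "add_t_to_a": lambda r: r.update(A=r["A"] + r["T"]),
}

# Control store: each machine instruction is defined by a short microprogram.
CONTROL_STORE: Dict[str, List[str]] = {
    "CLR": ["clear_a"],
    "INC": ["inc_a"],
    "DBL": ["copy_a_to_t", "add_t_to_a"],
}


def execute(program: List[str], control_store: Dict[str, List[str]]) -> Registers:
    """Run machine instructions by expanding each one into its micro-operations."""
    regs: Registers = {"A": 0, "T": 0}
    for instruction in program:
        for micro_op in control_store[instruction]:
            MICRO_OPS[micro_op](regs)
    return regs


print(execute(["CLR", "INC", "DBL", "DBL", "INC"], CONTROL_STORE)["A"])  # 5
```

Extending the instruction set only requires a new control-store entry: `{**CONTROL_STORE, "TPL": ["copy_a_to_t", "add_t_to_a", "add_t_to_a"]}` adds a tripling instruction without touching the micro-operations.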

Magnetic storage

By 1954, magnetic core memory was rapidly displacing most other forms of temporary storage, including the Williams tube. It went on to dominate the field through the mid-1970s.

- metal magnetic tape

- disk storage

Transistor computers

The bipolar transistor was invented in 1947. From 1955 onwards transistors replaced vacuum tubes in computer designs, giving rise to the "second generation" of computers.

A second generation computer, the IBM 1401, captured about one third of the world market. IBM installed more than ten thousand 1401s between 1960 and 1964.

Supercomputers

The Atlas Computer was one of the world's first supercomputers and was considered the most powerful computer in the world at the time.

It was said that whenever Atlas went offline half of the United Kingdom's computer capacity was lost.

In the US, a series of computers at Control Data Corporation (CDC) were designed by Seymour Cray to use innovative designs and parallelism to achieve superior computational peak performance.

The CDC 6600, released in 1964, is generally considered the first supercomputer.

The integrated circuit

The next great advance in computing power came with the advent of the integrated circuit.

The first practical ICs were invented by Jack Kilby at Texas Instruments and Robert Noyce at Fairchild Semiconductor. Kilby described his invention as “a body of semiconductor material ... wherein all the components of the electronic circuit are completely integrated.”

Post-1960: third generation and beyond

The explosion in the use of computers began with "third-generation" computers, making use of the integrated circuit (or microchip).

This led to the invention of the microprocessor.

The first single-chip microprocessor was the Intel 4004, released in 1971.

Personal computer

In April 1975 at the Hannover Fair, Olivetti presented the P6060, the world's first personal computer with built-in floppy disk.

It was in competition with a similar product by IBM that had an external floppy disk drive.

The MOS Technology KIM-1 and the Altair 8800 were sold as kits for do-it-yourselfers, as was the Apple I soon afterward.

The first programming language for the Altair 8800 was Altair BASIC, Microsoft's founding product.

In the 21st century

- multi-core CPUs

- semiconductor memory cell arrays

- CMOS logic gates

- fiber optics

- system on a chip

- the Internet

…

This has allowed computing to become a commodity which is now ubiquitous, embedded in many forms, from greeting cards and telephones to satellites.

Supercomputing, grid and cloud computing

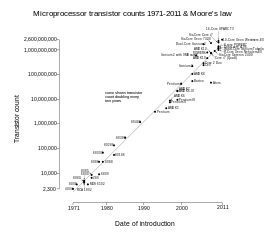

Moore's law

Moore's law is the observation that, over the history of computing hardware, the number of transistors in a dense integrated circuit doubles approximately every two years.

- The law is named after Gordon E. Moore, co-founder of the Intel Corporation, who described the trend in his 1965 paper.

- 1965: Moore projected a doubling roughly every year (a factor of two per year).

- 1975: Moore revised the forecast to a doubling of density every 24 months.

- The often-quoted 18-month figure came instead from David House, an Intel colleague, who factored in the increasing performance of transistors to conclude that integrated circuits would double in performance every 18 months.
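The differences between these doubling periods compound quickly. Assuming simple exponential growth N(t) = N0 · 2^(t/T) with doubling period T (an idealisation, not a claim about actual chip data), the Python sketch below compares the growth over ten years implied by each figure. Note that House's 18-month figure concerns performance rather than transistor count.

```python
def growth_factor(years: float, doubling_period_years: float) -> float:
    """Multiple by which a quantity grows in `years` if it doubles every `doubling_period_years`."""
    # N(t) = N0 * 2 ** (t / T), so the growth multiple is 2 ** (t / T).
    return 2 ** (years / doubling_period_years)


forecasts = [
    ("1965 forecast, doubling every 12 months", 1.0),
    ("1975 forecast, doubling every 24 months", 2.0),
    ("House's 18-month performance figure", 1.5),
]
for label, period in forecasts:
    print(f"{label}: x{growth_factor(10, period):,.0f} over 10 years")
```

Over a decade these periods give factors of 1,024, 32 and roughly 102 respectively.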

Epilogue

An indication of the rapidity of development of this field can be inferred from the history of the seminal 1947 article by Burks, Goldstine and von Neumann. By the time that anyone had time to write anything down, it was obsolete. After 1945, others read John von Neumann's First Draft of a Report on the EDVAC, and immediately started implementing their own systems. To this day, the pace of development has continued, worldwide.

A 1966 article in Time predicted that: "By 2000, the machines will be producing so much that everyone in the U.S. will, in effect, be independently wealthy. How to use leisure time will be a major problem."